Implementing the Google's autocomplete feature with ngram language models - Part 5

In this post, we will implement a simple n-gram model in C# using a limited corpus. This will highlight the key considerations to keep in mind when it comes to coding.

The code presented here likely contains errors and would need to be adapted for use in a production environment.

Let us assume we have collected a corpus of queries. For instance, in the case of Google, this corpus could consist of the history of all queries performed by users. From this data, we need to calculate the probabilities for the n-gram models and we will discover that, as is often the case, we need to adapt the theory to practical considerations to create a functional program.

What challenges arise when working directly with probabilities in computer science ?

Probabilities are values that range between 0 and 1. While this is not an issue in itself, problems can arise when these numbers become extremely small, as representing them accurately in floating-point arithmetic becomes increasingly difficult. To make matters worse, as we discussed in the previous post, we often need to multiply these probabilities together (chain rule of probability), resulting in even smaller values. This can ultimately lead to an underflow error.

The solution is to use logarithmic probabilities instead of raw probabilities, converting multiplications into additions.

By using log probabilities instead of raw probabilities, we get numbers that are not as small.

Speech and Language Processing

Defining an ngram

An n-gram can be straightforwardly modeled in C#.

1public class NGram

2{

3 public string Pattern { get; private set; }

4

5 public Dictionary<string, int> NextWords { get; set; }

6

7 public NGram(string pattern)

8 {

9 Pattern = pattern.ToLower();

10 NextWords = new Dictionary<string, int>();

11 }

12

13 public void AddNextWord(string word)

14 {

15 var existing = NextWords.ContainsKey(word);

16 if (existing)

17 {

18 NextWords[word] = NextWords[word] + 1;

19 }

20 else

21 {

22 NextWords.Add(word, 1);

23 }

24 }

25

26 public double GiveProbabilityFor(string word)

27 {

28 if (!NextWords.ContainsKey(word)) return 0.0;

29

30 var sum = NextWords.Values.Sum();

31 return (double)NextWords[word] / (double)sum;

32 }

33}

An n-gram, at its core, consists of a pattern and the various possible next words that follow it, each associated with a probability. This class also includes two methods: one for easily adding a new word and another for computing the associated probabilities.

Defining an ngram manager

The NGramManager class allows for the combination of different n-grams to calculate probabilities for an entire sentence. Its primary functions include assigning probabilities based on a specific corpus and providing the most likely next words (ranked by probability) for a given pattern.

In a real-world scenario, the two functions—assigning probabilities and predicting the next words—would typically be handled separately, often implemented as two distinct projects and possibly managed by two different teams.

1public class NGramManager

2{

3 public List<NGram> NGrams { get; private set; } = new List<NGram>();

4

5 public List<string> Vocabulary { get; private set; } = new List<string>();

6

7 public void AssignProbabilitiesFrom(List<string> corpus)

8 {

9 foreach (string line in corpus)

10 {

11 var ms = line.Split(' ', StringSplitOptions.RemoveEmptyEntries); var l = ms.Length;

12 ms = ms.Prepend("s").Append("/s").ToArray();

13

14 for (var i = 0; i <= l; i++)

15 {

16 var word = ms[i].ToLower();

17

18 if (!Vocabulary.Contains(word))

19 Vocabulary.Add(word);

20

21 var ngram = NGrams.FirstOrDefault(t => t.Pattern == word);

22 if (ngram == null)

23 {

24 ngram = new NGram(word);

25 ngram.AddNextWord(ms[i + 1].ToLower());

26 NGrams.Add(ngram);

27 }

28 else

29 {

30 ngram.AddNextWord(ms[i + 1].ToLower());

31 }

32 }

33 }

34 }

35

36 public List<string> GiveNextWordsFrom(string current)

37 {

38 var patterns = current.Split(' ', StringSplitOptions.RemoveEmptyEntries).Select(t => t.ToLower()).ToArray(); var l = patterns.Length;

39 patterns = patterns.Prepend("s").ToArray();

40

41 var probsum = 0.0;

42 for (var i = 0; i < l; i++)

43 {

44 var pattern = patterns[i];

45

46 var ng = NGrams.FirstOrDefault(x => x.Pattern == pattern);

47 if (ng == null) return new List<string>();

48

49 var next = ng.GiveProbabilityFor(patterns[i+1]);

50 if(next == 0.0) return new List<string>();

51

52 probsum = probsum + Math.Log(next);

53 }

54

55 var lastWord = patterns[l];

56 var ngram = NGrams.FirstOrDefault(x => x.Pattern == lastWord);

57 if (ngram == null) return new List<string>();

58

59 var list = new Dictionary<string, double>();

60 foreach (var word in Vocabulary)

61 {

62 var next = ngram.GiveProbabilityFor(word);

63 if (next > 0)

64 list.Add(word, probsum + Math.Log(next));

65 }

66

67 return list.OrderByDescending(p => p.Value).Select(x => x.Key).ToList();

68 }

69}

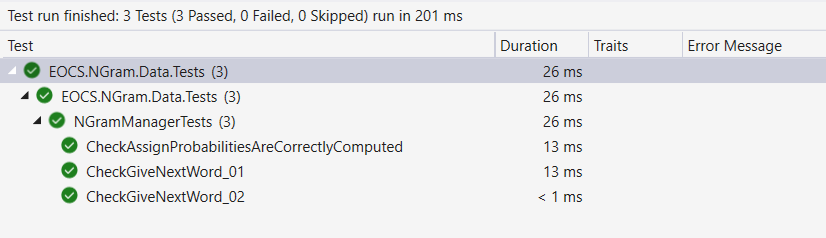

Testing our program

To verify the functionality of our program, we need to create and execute unit tests.

1public class NGramManagerTests

2{

3 [Test]

4 public void CheckAssignProbabilitiesAreCorrectlyComputed()

5 {

6 // Act

7 var manager = new NGramManager();

8 var corpus = new List<string>() { "I am silly", "I am silly", "I am clever", "I am sick", "I am mad", "I am tall", "I am tall", "I am tall", "I have a headache" };

9

10 // Arrange

11 manager.AssignProbabilitiesFrom(corpus);

12

13 // Assert

14 Assert.AreEqual(manager.Vocabulary.Count, 11);

15 Assert.AreEqual(manager.NGrams.Count, 11);

16 }

17

18 [Test]

19 public void CheckGiveNextWord_01()

20 {

21 // Act

22 var manager = new NGramManager();

23 var corpus = new List<string>() { "I am silly", "I am silly", "I am silly", "I am clever", "I am mad", "I am tall", "I am tall", "I am tall", "I am tall", "I am tall", "I have a silly dog", "How to become an engineer" };

24

25 // Arrange

26 manager.AssignProbabilitiesFrom(corpus);

27

28 var nexts = manager.GiveNextWordsFrom("I am");

29

30 // Assert

31 Assert.AreEqual(nexts.Take(3), new List<string>() { "tall", "silly", "clever" });

32 }

33

34 [Test]

35 public void CheckGiveNextWord_02()

36 {

37 // Act

38 var manager = new NGramManager();

39 var corpus = new List<string>() { "I am silly", "I am silly", "I am silly", "I am clever", "I am mad", "I am tall", "I am tall", "I am tall", "I am tall", "I am tall", "I have a silly dog", "How to become an engineer" };

40

41 // Arrange

42 manager.AssignProbabilitiesFrom(corpus);

43

44 var nexts = manager.GiveNextWordsFrom("I want");

45

46 // Assert

47 Assert.AreEqual(nexts.Count, 0);

48 }

49}

We can confirm that all our tests pass successfully.

Before concluding this series, we must highlight some limitations of our implementation and examine why n-grams need to be carefully adapted to specific circumstances. This will be the focus of our final post.

Implementing the Google's autocomplete feature with ngram language models - Part 6