Implementing the Google's autocomplete feature with ngram language models - Part 4

In this post, we will build on the concepts covered in the previous posts and explore how they can be applied to n-gram language models.

What do probabilities have to do with Google's autocomplete feature ? This is what we will explore now.

This section is inspired by the book Speech and Language Processing.

How can we model the problem at hand ?

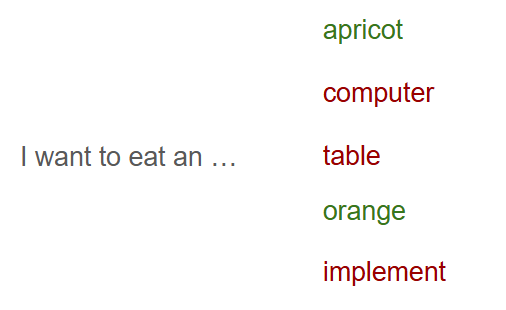

The Google autocomplete feature can be seen as predicting the next few words a user is likely to type. For instance, if we type "I am eating an", the next word is likely to be "apple" but much less likely to be "computer". But how can we formalize this intuition ? Simply by assigning a probability to each potential next word and then selecting the most probable one.

Models like that (that assign probabilities to sequences of words) are called language models or LMs. Here, we introduce the simplest model that assigns probabilities to sentences and sequences of words: the n-gram.

What are n-gram language models ?

We will now provide the formal definition of an n-gram model.

n-gram language models are statistical models used to predict the next word in a sequence based on the previous n-1 words. They are built by analyzing the probability of word sequences from a large corpus of text.

More precisely, an n-gram is a contiguous sequence of n items (words, in the case of language models) from a given text. The basic idea is that the likelihood of a word occurring depends on the preceding words.

In particular:

- A 1-gram (or unigram) model predicts the next word based solely on the frequency of individual words in the corpus.

- A 2-gram (or bigram) model predicts the next word based on the previous word (i.e., it looks at pairs of consecutive words).

- A 3-gram (or trigram) model predicts the next word based on the previous two words, and so on.

Thats sounds complicated ! Could you provide a more concrete explanation, please ?

Let's begin with a naïve example

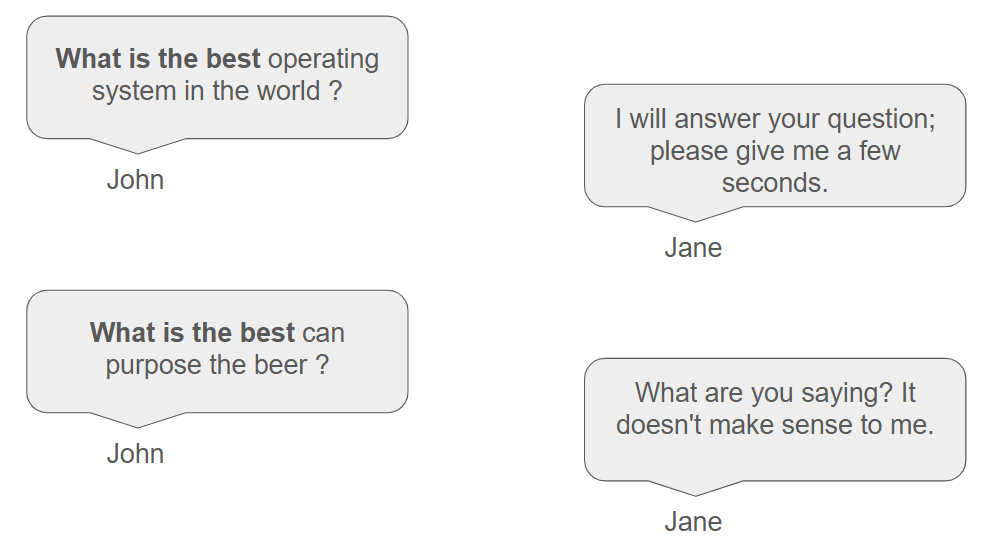

Imagine we're typing a query into the Google search engine, and the first words are, for example, "What is the best". As mentioned earlier, these words can be followed by certain words, but not by others.

Therefore, given that the first words are "What is the best", it is more likely that the next word will be "operating" rather than "can". In mathematical terms, we have: $P(operating|What is the best) > P(can|What is the best)$.

This means that for each query entered by a user, we need to assign a probability to each possible word in the vocabulary.

$$P(apricot|What is the best)=0.0637809$$ $$P(awful|What is the best)=0.0450639$$ $$...$$ $$P(can|What is the best)=0.0000002$$ $$...$$ $$P(zoo|What is the best)=0.0725298$$

A natural question that arises here is, of course, how to calculate these probabilities. One way to estimate them is from relative frequency counts: take a very large corpus, count the number of times we see "What is the best", and count the number of times this is followed by "operating" or "can". $$P(operating|What is the best) = \dfrac{number\thinspace of\thinspace times\thinspace we\thinspace see\thinspace What\thinspace is\thinspace the\thinspace best\thinspace operating}{number\thinspace of\thinspace times\thinspace we\thinspace see\thinspace What\thinspace is\thinspace the\thinspace best}$$

Unfortunately, although this method seems natural, it is doomed to fail because there would never be a large enough corpus to account for all possible combinations.

While this method of estimating probabilities directly from counts works fine in many cases, it turns out that even the web isn’t big enough to give us good estimates in most cases.

Speech and Language Processing

A smart approach to take

We will approach the problem in a slightly different way, but will still rely on conditional probabilities. Imagine that our query begins with a sequence of $n$ words $w_{1}...w_{n}$. What we ultimately want is to suggest the next word; mathematically, we want to estimate the joint probability $P(w_{1},...,w_{n},b)$ for all possible words $b$.

We can now use the chain rule of probability that we explored in the last post. $$P(w_{1},...,w_{n},b)=P(w_{1})P(w_{2}|w_{1})...P(b|w_{1}...w_{n})$$

There's nothing mysterious here; it is simply an exact formula.

The chain rule shows the link between computing the joint probability of a sequence and computing the conditional probability of a word given previous words.

Speech and Language Processing

However, from this point onward, we will rely on approximations. This is where the crucial idea of n-gram models comes into play. Instead of estimating the probability $P(b|w_{1}...w_{n})$ directly, we will approximate it by considering only the most recent words in the history, rather than the entire sequence. For example, we could approximate this probability by using only the previous word.

$$P(b|w_{1}...w_{n})\approx P(b|w_{n})$$

This approach is referred to as the bigram model. For example, in our case, we would have $P(operating|What is the best)\approx P(operating|best)$.

In other words, we are assuming that the next word in a sentence depends solely on the current word, rather than the entire preceding context. This assumption is commonly used in mathematical models and can be highly effective. It is called the Markov assumption.

Estimating such a probability becomes much easier because we require a much smaller corpus. However, as with most things, there's no free lunch: by relying solely on the current word, we may achieve suboptimal results. This is why practitioners often use trigrams or 4-grams instead of bigrams, as they provide much more accurate results.

With trigrams, we consider the current word and the one word preceding it (as opposed to just the current word in a bigram). More generally, with an n-gram model, we look at the current word and the previous n-1 words.

For trigrams $$P(b|w_{1}...w_{n})\approx P(b|w_{n-1}w_{n})$$

For 4-grams $$P(b|w_{1}...w_{n})\approx P(b|w_{n-2}w_{n-1}w_{n})$$

Evaluating these probabilities can be done as outlined earlier (the example below is for trigrams).

$$P(b|w_{n-1}w_{n}) = \dfrac{number\thinspace of\thinspace times\thinspace we\thinspace see\thinspace w_{n-1}w_{n}b}{number\thinspace of\thinspace times\thinspace we\thinspace see\thinspace w_{n-1}w_{n}}$$

From that, applying the chain rule of probability to calculate the probability of a given sentence becomes relatively straightforward. It is simply a matter of calculating the individual probabilities and multiplying them together.

$$\boxed{P(w_{1},...,w_{n},b)\approx P(w_{1})P(w_{2}|w_{1})P(w_{3}|w_{1}w_{2})P(w_{4}|w_{2}w_{3})...P(b|w_{n-1}w_{n})}$$

A worked example

It would be helpful to illustrate this topic with a concrete example. Although simple, it will clearly demonstrate how n-gram models function in practice.

To start, we need a corpus

To keep the computation straightforward, we will use a very small corpus consisting of just a few sentences. Additionally, we will focus exclusively on bigrams. And since we are working on Google's autocomplete feature, we will use query-like patterns for our examples.

Thus, we will assume that the following corpus has been generated from various queries in a search engine. Note that some queries may appear multiple times.

A common practice is to add special characters (often referred to as <s> and </s>) at the beginning and at the end of each sentence to represent a begin and an end marker.

| <s> I am silly \ |

| <s> I am mad </s> |

| <s> I am clever </s> |

| <s> I am silly </s> |

| <s> I am tall </s> |

| <s> I am tall </s> |

| <s> I am silly </s> |

| <s> I am tall </s> |

| <s> I am tall </s> |

| <s> I am tall </s> |

| <s> I have a silly dog </s> |

| <s> How to become an engineer ? </s> |

Next, we need to calculate the various probabilities

Here are the calculations for some of the bigram probabilities from this corpus.

$$P(I|<s>)=\dfrac{11}{12}$$ $$P(How|<s>)=\dfrac{1}{12}$$ $$P(silly|am)=\dfrac{3}{10}$$ $$P(</s>|silly)=\dfrac{3}{4}$$ $$P(am|I)=\dfrac{10}{11}$$ $$...$$

Finally, we can use these probabilities to make predictions

Now we can compute the probability of sentences like "I am silly" by simply multiplying the appropriate bigram probabilities together, as follows.

$$P(<s>I am silly</s>)=P(I|<s>)P(am|I)P(silly|am)P(</s>|silly)=\dfrac{11}{12}\dfrac{10}{11}\dfrac{3}{10}\dfrac{3}{4}$$ $$P(<s>I am silly</s>)=0.1875$$

In the next post, we will explore how to implement an n-gram model in C# in a practical way.

Implementing the Google's autocomplete feature with ngram language models - Part 5